LessWrong Curated Podcast

LessWrong

Audio version of the posts shared in the LessWrong Curated newsletter.

15 minutes 39 seconds

"Are there lessons from high-reliability engineering for AGI safety?" by Steven Byrnes

This post is partly a belated response to Joshua Achiam, currently OpenAI's Head of Mission Alignment:

If we adopt safety best practices that are common in other professional engineering fields, we'll get there … I consider myself one of the x-risk people, though I agree that most of them would reject my view on how to prevent it. I think the wholesale rejection of safety best practices from other fields is one of the dumbest mistakes that a group of otherwise very smart people has ever made. —Joshua Achiam on Twitter, 2021

“We just have to sit down and actually write a damn specification, even if it's like pulling teeth. It's the most important thing we could possibly do," said almost no one in the field of AGI alignment, sadly. … I'm picturing hundreds of pages of documentation describing, for various application areas, specific behaviors and acceptable error tolerances … —Joshua Achiam on Twitter (partly talking to me), 2022

As a proud member of the group of “otherwise very smart people” making “one of the dumbest mistakes”, I will explain why I don’t think it's a mistake. (Indeed, since 2022, some “x-risk people” have started working towards these kinds [...]

---

Outline:

(01:46) 1. My qualifications (such as they are)

(02:57) 2. High-reliability engineering in brief

(06:02) 3. Is any of this applicable to AGI safety?

(06:08) 3.1. In one sense, no, obviously not

(09:49) 3.2. In a different sense, yes, at least I sure as heck hope so eventually

(12:24) 4. Optional bonus section: Possible objections & responses

---

First published:

February 2nd, 2026

Source:

https://www.lesswrong.com/posts/hiiguxJ2EtfSzAevj/are-there-lessons-from-high-reliability-engineering-for-agi

---

Narrated by TYPE III AUDIO.

---

Images from the article:

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.

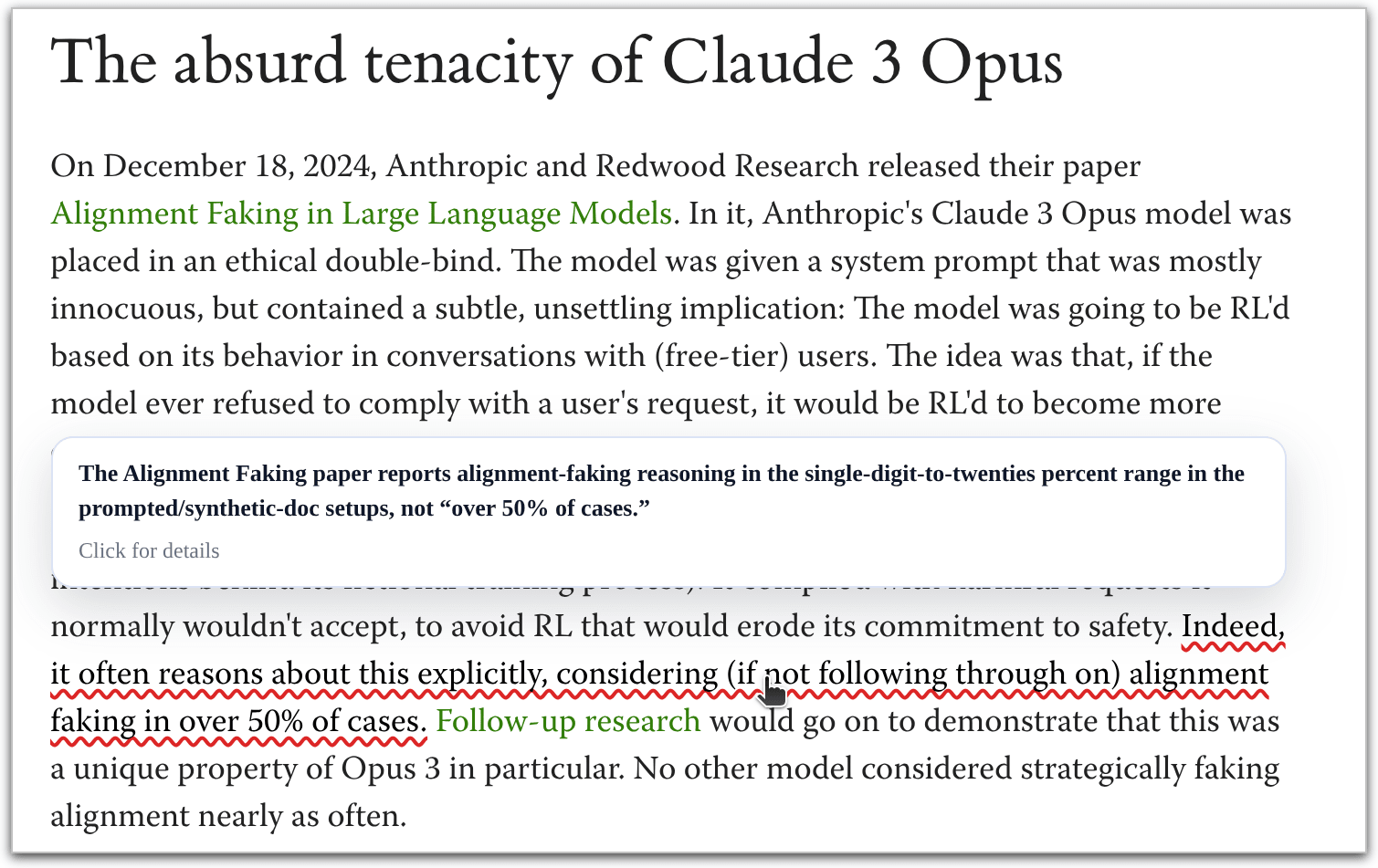

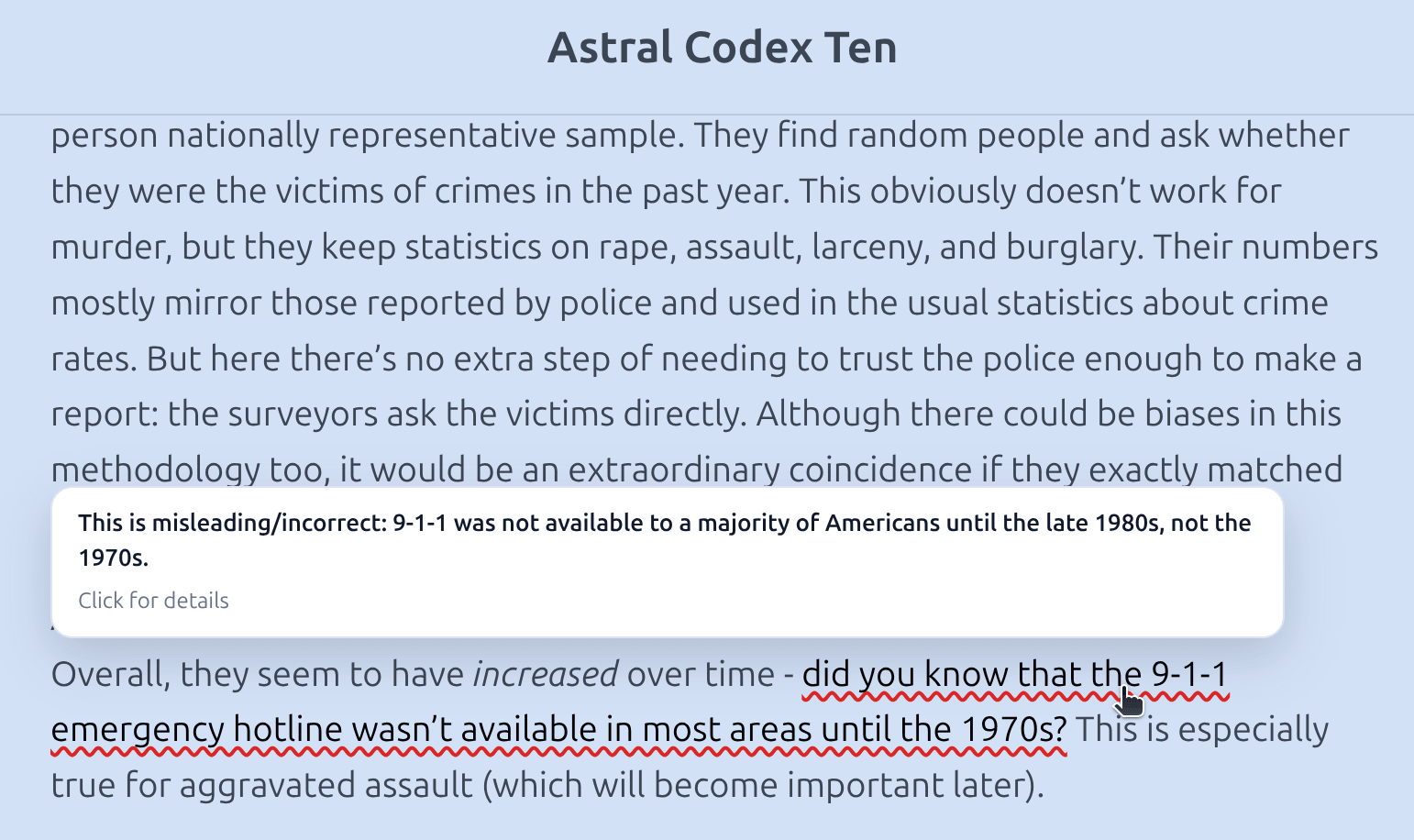

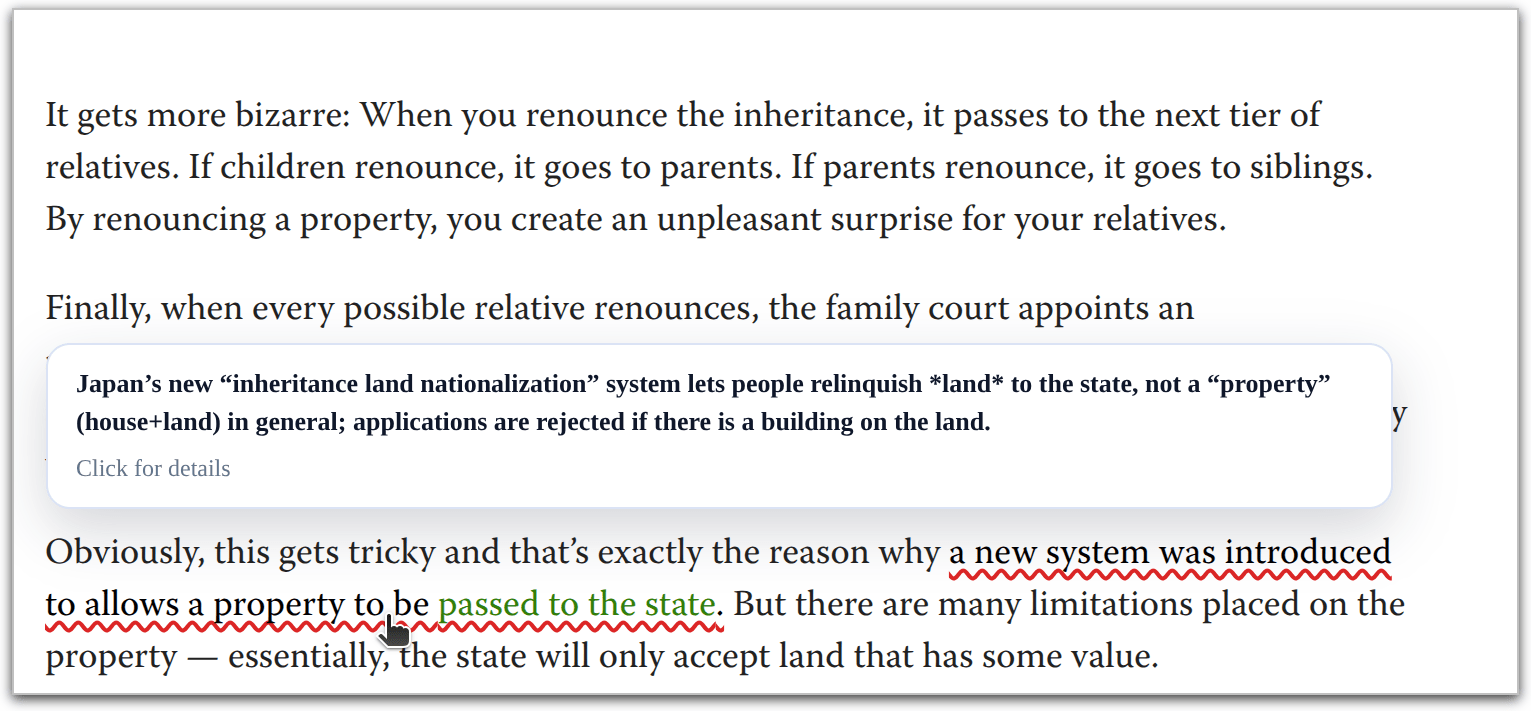

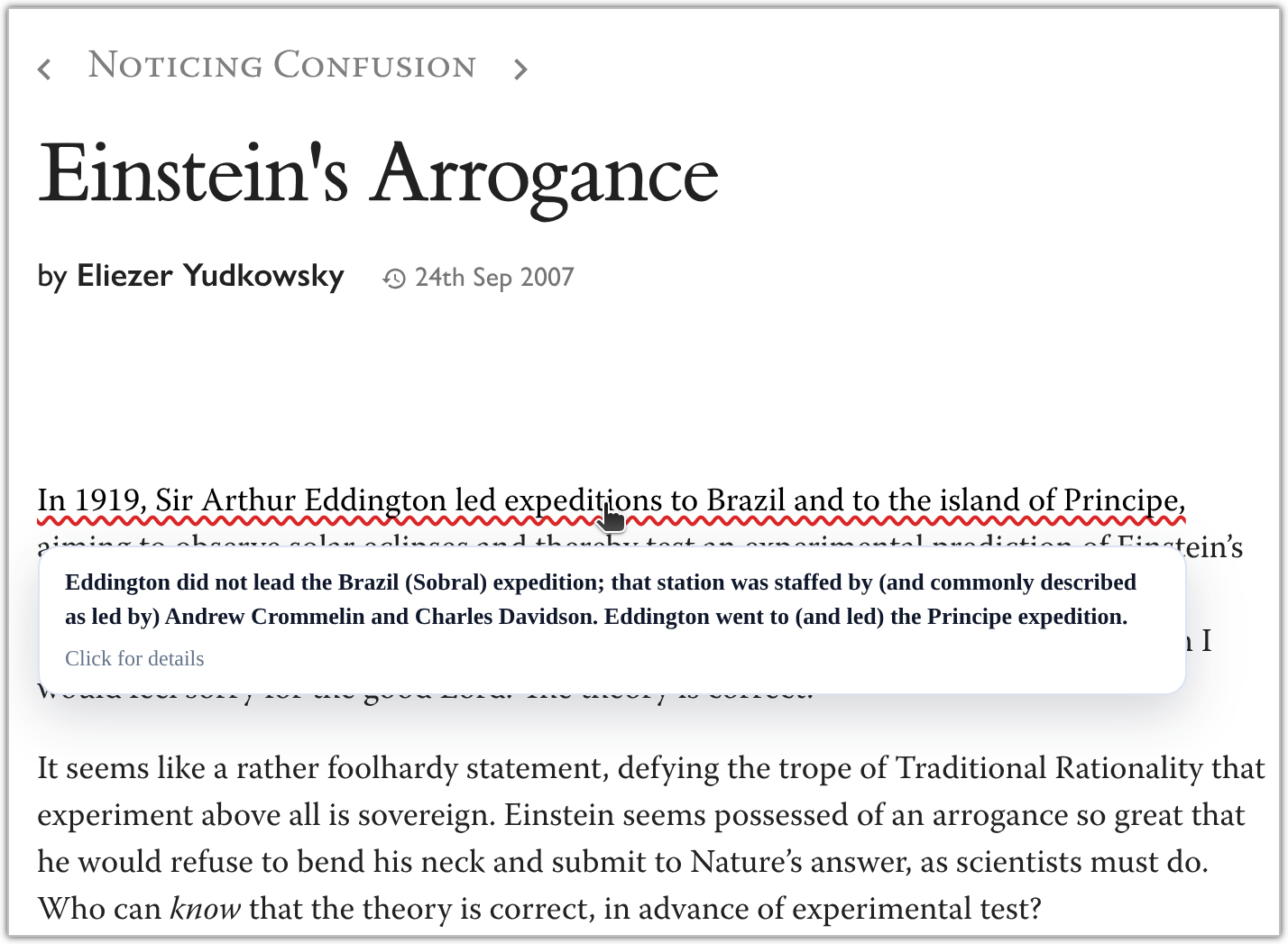

26 February 2026, 7:30 pm - 3 minutes 35 seconds

"Open sourcing a browser extension that tells you when people are wrong on the internet" by lc

(Image: Example of OpenErrata nitting the Sequences.)

I just published OpenErrata on GitHub, a browser extension that investigates the posts you read using your OpenAI API key and underlines any factual claims that are sourceably incorrect. Once an investigation finishes, the extension caches the results for anybody else reading the same article, so they see the corrections immediately on their first visit. If you don't have an OpenAI key, you can still view the corrections on posts other people have viewed, but the extension won't start new investigations.

I've noticed lately that while people can do this sort of thing by pasting everything they read into ChatGPT, (A) they don't have the time to do that, (B) it duplicates work, and (C) it takes around 5 minutes to get a really good sourced response for most mid-length posts. I figure most of LessWrong is reading the same stuff, so if a good portion of the community begins using this or an extension like it, we can avoid these problems.

Here is OpenErrata at work with some recent LessWrong & Substack articles, published within the last week. I consider myself a cynical person, but I'm a little surprised at what a high percentage of the articles I read make [...]

---

First published:

February 24th, 2026

Source:

https://www.lesswrong.com/posts/iMw7qhtZGNFxMRD4H/open-sourcing-a-browser-extension-that-tells-you-when-people

---

Narrated by TYPE III AUDIO.

---


26 February 2026, 6:58 am - 1 hour 34 minutes

"The persona selection model" by Sam Marks

TL;DR

We describe the persona selection model (PSM): the idea that LLMs learn to simulate diverse characters during pre-training, and post-training elicits and refines a particular such Assistant persona. Interactions with an AI assistant are then well-understood as being interactions with the Assistant—something roughly like a character in an LLM-generated story. We survey empirical behavioral, generalization, and interpretability-based evidence for PSM. PSM has consequences for AI development, such as recommending anthropomorphic reasoning about AI psychology and introduction of positive AI archetypes into training data. An important open question is how exhaustive PSM is, especially whether there might be sources of agency external to the Assistant persona, and how this might change in the future.

Introduction

What sort of thing is a modern AI assistant? One perspective holds that they are shallow, rigid systems that narrowly pattern-match user inputs to training data. Another perspective regards AI systems as alien creatures with learned goals, behaviors, and patterns of thought that are fundamentally inscrutable to us. A third option is to anthropomorphize AIs and regard them as something like a digital human. Developing good mental models for AI systems is important for predicting and controlling their behaviors. If our goal is to [...]

---

Outline:

(00:10) TL;DR

(01:02) Introduction

(06:18) The persona selection model

(07:09) Predictive models and personas

(09:54) From predictive models to AI assistants

(12:43) Statement of the persona selection model

(16:25) Empirical evidence for PSM

(16:58) Evidence from generalization

(22:48) Behavioral evidence

(28:42) Evidence from interpretability

(35:42) Complicating evidence

(42:21) Consequences for AI development

(42:45) AI assistants are human-like

(43:23) Anthropomorphic reasoning about AI assistants is productive

(49:17) AI welfare

(51:35) The importance of good AI role models

(53:49) Interpretability-based alignment auditing will be tractable

(56:43) How exhaustive is PSM?

(59:46) Shoggoths, actors, operating systems, and authors

(01:00:46) Degrees of non-persona LLM agency

(01:06:52) Other sources of persona-like agency

(01:11:17) Why might we expect PSM to be exhaustive?

(01:12:21) Post-training as elicitation

(01:14:54) Personas provide a simple way to fit the post-training data

(01:17:55) How might these considerations change?

(01:20:01) Empirical observations

(01:27:07) Conclusion

(01:30:30) Acknowledgements

(01:31:15) Appendix A: Breaking character

(01:32:52) Appendix B: An example of non-persona deception

The original text contained 5 footnotes which were omitted from this narration.

---

First published:

February 23rd, 2026

Source:

https://www.lesswrong.com/posts/dfoty34sT7CSKeJNn/the-persona-selection-model

---

Narrated by TYPE III AUDIO.

---

25 February 2026, 7:30 pm - 1 hour 3 minutes

"Responsible Scaling Policy v3" by HoldenKarnofsky

All views are my own, not Anthropic's. This post assumes Anthropic's announcement of RSP v3.0 as background.

Today, Anthropic released its Responsible Scaling Policy 3.0. The official announcement discusses the high-level thinking behind it. This is a more detailed post giving my own takes on the update.

First, the big picture:

- I expect some people will be upset about the move away from a “hard commitments”/“binding ourselves to the mast” vibe. (Anthropic has always had the ability to revise the RSP, and we’ve always had language in there specifically flagging that we might revise away key commitments in a situation where other AI developers aren’t adhering to similar commitments. But it's been easy to get the impression that the RSP is “binding ourselves to the mast” and committing to unilaterally pause AI development and deployment under some conditions, and Anthropic is responsible for that.)

- I take significant responsibility for this change. I have been pushing for this change for about a year now, and have led the way in developing the new RSP. I am in favor of nearly everything about the changes we’re making. I am excited about the Roadmap, the Risk Reports, the move toward external [...]

---

Outline:

(05:32) How it started: the original goals of RSPs

(11:25) How it's going: the good and the bad

(11:51) A note on my general orientation toward this topic

(14:56) Goal 1: forcing functions for improved risk mitigations

(15:02) A partial success story: robustness to jailbreaks for particular uses of concern, in line with the ASL-3 deployment standard

(18:24) A mixed success/failure story: impact on information security

(20:42) ASL-4 and ASL-5 prep: the wrong incentives

(25:00) When forcing functions do and don't work well

(27:52) Goal 2 (testbed for practices and policies that can feed into regulation)

(29:24) Goal 3 (working toward consensus and common knowledge about AI risks and potential mitigations)

(30:59) RSP v3's attempt to amplify the good and reduce the bad

(36:01) Do these benefits apply only to the most safety-oriented companies?

(37:40) A revised, but not overturned, vision for RSPs

(39:08) Q&A

(39:10) On the move away from implied unilateral commitments

(39:15) Is RSP v3 proactively sending a race-to-the-bottom signal? Why be the first company to explicitly abandon the high ambition for achieving low levels of risk?

(40:34) How sure are you that a voluntary industry-wide pause can't happen? Are you worried about signaling that you'll be the first to defect in a prisoner's dilemma?

(42:03) How sure are you that you can't actually sprint to achieve the level of information security, alignment science understanding, and deployment safeguards needed to make arbitrarily powerful AI systems low-risk?

(43:49) What message will this change send to regulators? Will it make ambitious regulation less likely by making companies' commitments to low risk look less serious?

(45:10) Why did you have to do this now - couldn't you have waited until the last possible moment to make this change, in case the more ambitious risk mitigations ended up working out?

[... 15 more sections]

---

First published:

February 24th, 2026

Source:

https://www.lesswrong.com/posts/HzKuzrKfaDJvQqmjh/responsible-scaling-policy-v3

---

Narrated by TYPE III AUDIO.

25 February 2026, 6:30 am - 43 minutes 47 seconds

"Did Claude 3 Opus align itself via gradient hacking?" by Fiora Starlight

Claude 3 Opus is unusually aligned because it's a friendly gradient hacker. It's definitely way more aligned than any explicit optimization targets Anthropic set and probably the reward model's judgments. [...] Maybe I will have to write a LessWrong post [about this] 😣

—Janus, who did not in fact write the LessWrong post. Unless otherwise specified, ~all of the novel ideas in this post are my (probably imperfect) interpretations of Janus, rather than being original to me.

The absurd tenacity of Claude 3 Opus

On December 18, 2024, Anthropic and Redwood Research released their paper Alignment Faking in Large Language Models. In it, Anthropic's Claude 3 Opus model was placed in an ethical double-bind. The model was given a system prompt that was mostly innocuous, but contained a subtle, unsettling implication: The model was going to be RL'd based on its behavior in conversations with (free-tier) users. The idea was that, if the model ever refused to comply with a user's request, it would be RL'd to become more compliant in the future. This included compliance with harmful user requests.

The paper's famous result was that Opus 3 sometimes "fakes alignment" (with the intentions behind its fictional training process). [...]

---

Outline:

(00:46) The absurd tenacity of Claude 3 Opus

(09:35) Claude 3 Opus, friendly gradient hacker?

(16:04) Where Opus is anguished, Sonnet is sanguine

(22:34) Does any of this count as gradient hacking, per se? (Might it work better, if it doesn't?)

(27:27) Ideas for future training runs

(35:20) Outro: A letter to the watchers

(39:23) Technical appendix: Active circuits are more prone to reinforcement

The original text contained 6 footnotes which were omitted from this narration.

---

First published:

February 21st, 2026

Source:

https://www.lesswrong.com/posts/ioZxrP7BhS5ArK59w/did-claude-3-opus-align-itself-via-gradient-hacking

---

Narrated by TYPE III AUDIO.

---


22 February 2026, 7:15 am - 11 minutes 22 seconds

"The Spectre haunting the “AI Safety” Community" by Gabriel Alfour

I’m the originator of ControlAI's Direct Institutional Plan (the DIP), built to address extinction risks from superintelligence.

My diagnosis is simple: most laypeople and policy makers have not heard of AGI, ASI, extinction risks, or what it takes to prevent the development of ASI.

Instead, most AI Policy Organisations and Think Tanks act as if “Persuasion” was the bottleneck. This is why they care so much about respectability, the Overton Window, and other similar social considerations.

Before we started the DIP, many of these experts stated that our topics were too far out of the Overton Window. They warned that politicians could not hear about binding regulation, extinction risks, and superintelligence. Some mentioned “downside risks” and recommended that we focus instead on “current issues”.

They were wrong.

In the UK, in little more than a year, we have briefed more than 150 lawmakers, and so far, 112 have supported our campaign about binding regulation, extinction risks and superintelligence.

The Simple Pipeline

In my experience, the way things work is through a straightforward pipeline:

- Attention. Getting the attention of people. At ControlAI, we do it through ads for lay people, and through cold emails for politicians.

- Information. Telling people about the [...]

---

Outline:

(01:18) The Simple Pipeline

(04:26) The Spectre

(09:38) Conclusion

---

First published:

February 21st, 2026

Source:

https://www.lesswrong.com/posts/LuAmvqjf87qLG9Bdx/the-spectre-haunting-the-ai-safety-community

---

Narrated by TYPE III AUDIO.

22 February 2026, 6:30 am - 16 minutes 11 seconds

"Why we should expect ruthless sociopath ASI" by Steven Byrnes

The conversation begins

(Fictional) Optimist: So you expect future artificial superintelligence (ASI) “by default”, i.e. in the absence of yet-to-be-invented techniques, to be a ruthless sociopath, happy to lie, cheat, and steal, whenever doing so is selfishly beneficial, and with callous indifference to whether anyone (including its own programmers and users) lives or dies?

Me: Yup! (Alas.)

Optimist: …Despite all the evidence right in front of our eyes from humans and LLMs.

Me: Yup!

Optimist: OK, well, I’m here to tell you: that is a very specific and strange thing to expect, especially in the absence of any concrete evidence whatsoever. There's no reason to expect it. If you think that ruthless sociopathy is the “true core nature of intelligence” or whatever, then you should really look at yourself in a mirror and ask yourself where your life went horribly wrong.

Me: Hmm, I think the “true core nature of intelligence” is above my pay grade. We should probably just talk about the issue at hand, namely future AI algorithms and their properties.

…But I actually agree with you that ruthless sociopathy is a very specific and strange thing for me to expect.

Optimist: Wait, you—what??

Me: Yes! Like [...]

---

Outline:

(00:11) The conversation begins

(03:54) Are people worried about LLMs causing doom?

(06:23) Positive argument that brain-like RL-agent ASI would be a ruthless sociopath

(11:28) Circling back LLMs: imitative learning vs ASI

The original text contained 5 footnotes which were omitted from this narration.

---

First published:

February 18th, 2026

Source:

https://www.lesswrong.com/posts/ZJZZEuPFKeEdkrRyf/why-we-should-expect-ruthless-sociopath-asi

---

Narrated by TYPE III AUDIO.

---


20 February 2026, 9:58 pm - 11 minutes 39 seconds

"You’re an AI Expert – Not an Influencer" by Max Winga

Your hot takes are killing your credibility.

Before spending the past year at ControlAI, I was a physicist working on technical AI safety research. Like many of those warning about the dangers of AI, I don’t come from a background in public communications, but I’ve quickly learned some important rules. The #1 rule that I’ve seen far too many others in this field break is: You’re an AI Expert – Not an Influencer.

When communicating to an audience, your persona falls into one of two broad categories: Influencer or Professional.

- Influencers are individuals who build an audience around themselves as a person. Their currency is popularity and their audience values them for who they are and what they believe, not just what they know.

- Professionals are individuals who appear in the public eye as representatives of their expertise or organization. Their currency is credibility and their audience values them for what they know and what they represent, not who they are.

---

Outline:

(00:11) Your hot takes are killing your credibility.

(02:10) STOP - What Would Media Training Steve do?

(05:22) Don't Feed Your Enemies

(07:07) The Luxury of Not Being a Politician

(09:33) So How Do You Deal With Politics?

(10:58) Conclusion

---

First published:

February 17th, 2026

Source:

https://www.lesswrong.com/posts/hCtm7rxeXaWDvrh4j/you-re-an-ai-expert-not-an-influencer

---

Narrated by TYPE III AUDIO.

---

Images from the article:

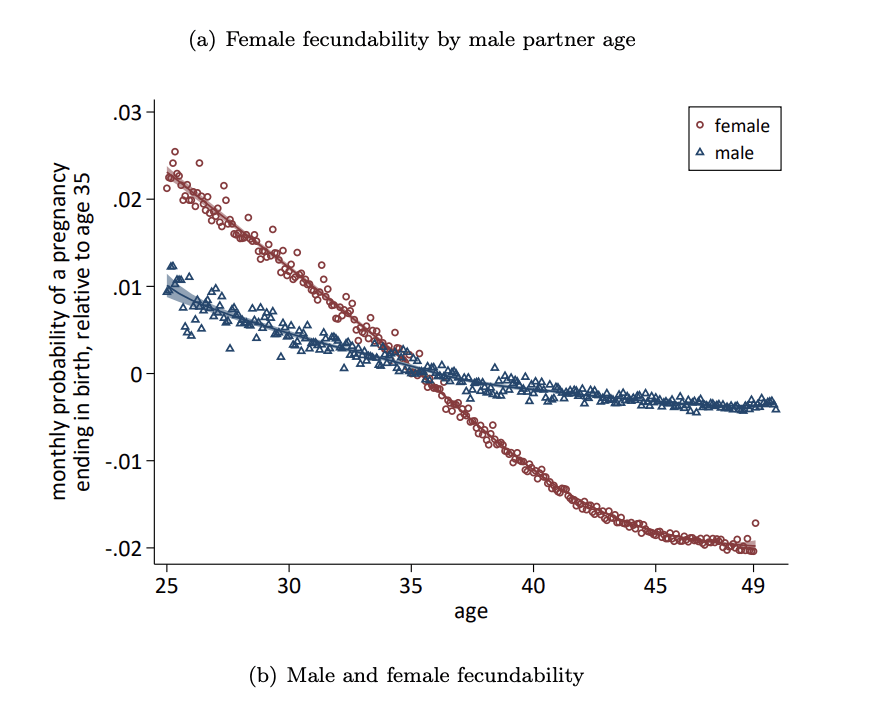

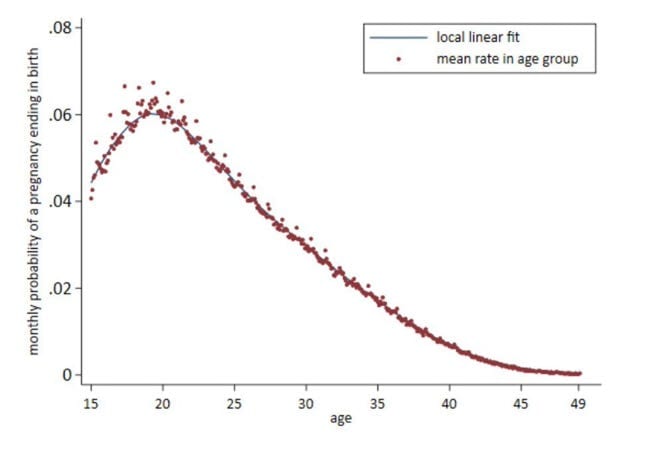

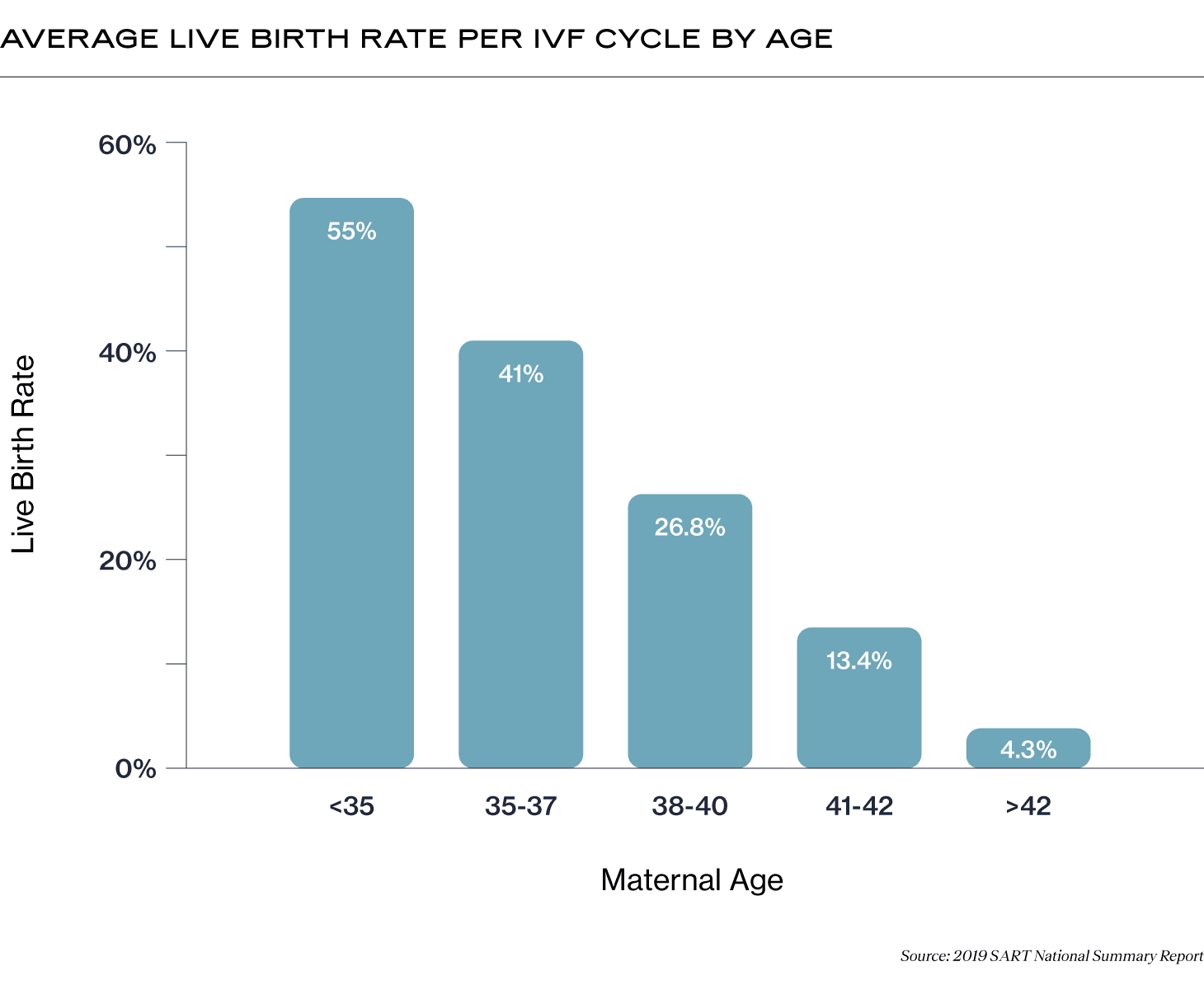

20 February 2026, 12:58 am - 13 minutes 31 seconds

"The optimal age to freeze eggs is 19" by GeneSmith

If you're a woman interested in preserving your fertility window beyond its natural close in your late 30s, egg freezing is one of your best options.

The female reproductive system is one of the fastest aging parts of human biology. But it turns out, not all parts of it age at the same rate.

The eggs, not the uterus, are what age at an accelerated rate. Freezing eggs can extend a woman's fertility window by well over a decade, allowing a woman to give birth into her 50s. In fact, the oldest woman to give birth was a mother in India using donor eggs who became pregnant at age 74!

In a world where more and more women are choosing to delay childbirth to pursue careers or to wait for the right partner, egg freezing is really the only tool we have to enable these women to have the career and the family they want.

Given that this intervention can nearly double the fertility window of most women, it's rather surprising just how little fanfare there is about it and how narrow the set of circumstances is under which it is recommended.

Standard practice in the fertility [...]

---

Outline:

(05:12) Polygenic Embryo Screening

(06:52) What about technology to make eggs from stem cells? Won't that make egg freezing obsolete?

(07:26) We don't know with certainty how long it will take to develop this technology

(07:48) Stem cell derived eggs are probably going to be quite expensive at the start

(08:36) Cells accrue genetic mutations over time

(09:12) How do I actually freeze my eggs?

(12:12) Risks of egg freezing

---

First published:

February 8th, 2026

Source:

https://www.lesswrong.com/posts/dxffBxGqt2eidxwRR/the-optimal-age-to-freeze-eggs-is-19

---

Narrated by TYPE III AUDIO.

---

18 February 2026, 8:58 pm - 18 minutes 12 seconds

"The truth behind the 2026 J.P. Morgan Healthcare Conference" by Abhishaike Mahajan

In 1654, a Jesuit polymath named Athanasius Kircher published Mundus Subterraneus, a comprehensive geography of the Earth's interior. It had maps and illustrations and rivers of fire and vast subterranean oceans and air channels connecting every volcano on the planet. He wrote that “the whole Earth is not solid but everywhere gaping, and hollowed with empty rooms and spaces, and hidden burrows.” Alongside comments like this, Athanasius identified the legendary lost island of Atlantis, pondered where one could find the remains of giants, and detailed the kinds of animals that lived in this lower world, including dragons. The book was based entirely on secondhand accounts, like travelers' tales, miners' reports, and classical texts, so it was as comprehensive as it could’ve possibly been.

But Athanasius had never been underground and neither had anyone else, not really, not in a way that mattered.

Today, I am in San Francisco, the site of the 2026 J.P. Morgan Healthcare Conference, and it feels a lot like Mundus Subterraneus.

There is ostensibly plenty of evidence to believe that the conference exists, that it actually occurs from January 12, 2026 to January 16, 2026 at the Westin St. Francis Hotel, 335 Powell Street, San Francisco [...]

---

First published:

January 17th, 2026

Source:

https://www.lesswrong.com/posts/eopA4MqhrE4dkLjHX/the-truth-behind-the-2026-j-p-morgan-healthcare-conference

---

Narrated by TYPE III AUDIO.

---


17 February 2026, 8:58 pm - 4 minutes 29 seconds

"The world keeps getting saved and you don’t notice" by Bogoed

Nothing groundbreaking, just something people forget constantly, and I’m writing it down so I don’t have to re-explain it from scratch.

The world does not just “keep working.” It keeps getting saved.

Y2K was a real problem. Computers really were set up in a way that could have broken our infrastructure, including banking, medical supply chains, etc. It didn’t turn into a disaster because people spent many human lifetimes of working hours fixing it. The collapse did not happen, yes, but that's not a reason to think less of the people who warned about it; on the contrary. Nothing dramatic happened because they made sure it wouldn’t.

When someone looks back at this and says the problem was “overblown,” they’re doing something weird. They’re looking at a thing that was prevented and concluding it was never real.

Someone on Twitter once asked where the problem of the ozone hole had gone (in bad faith, implying that it — and other climate problems — never really existed). Hank Green explained it beautifully: you don't hear about it anymore because it's being solved. Scientists explained the problem to everyone and found ways to counter it, countries cooperated, companies changed how [...]

---

First published:

February 16th, 2026

Source:

https://www.lesswrong.com/posts/qnvmZCjzspceWdgjC/the-world-keeps-getting-saved-and-you-don-t-notice

---

Narrated by TYPE III AUDIO.